Challenger, 40 Years On: Have We Learned Anything?

On the morning of 28 January 1986, ice hung from the launch tower at Cape Canaveral. Engineers knew the temperature was unusually low, colder than anything the Space Shuttle programme had experienced before. They also knew that parts of the shuttle’s solid rocket boosters behaved unpredictably in the cold.

Seventy-three seconds after launch, Challenger disintegrated in front of a watching world.

Forty years later, the video footage from that day still feels unreal. The clean white plume splitting into two forks. The brief pause before comprehension catches up. The dawning understanding that something irreversible has just happened.

Seven astronauts were killed. A generation lost its innocence about spaceflight, and NASA was forced to confront an uncomfortable truth: the shuttle had not failed because of a freak accident, but because known risks had been accepted, normalised, and ultimately ignored.

The question worth asking, four decades on, is not what went wrong mechanically. We know that story well enough. The harder question is whether we actually learned what Challenger was trying to tell us.

The People We Lost

Before Challenger became a lesson, it was a routine mission with seven astronauts who expected to come home.

Francis “Dick” Scobee, the mission commander, was a veteran test pilot who believed deeply in the shuttle programme and its promise, a man steady under pressure and trusted by the people who flew with him. Michael Smith, the pilot, was on his first spaceflight, meticulously prepared for a career that never had time to unfold, the kind of flier who lived in the details because details mattered. Judith Resnik, an electrical engineer, had already flown once and represented the technical excellence NASA relied on, intensely capable and at home with the systems that made the space programme work. Ronald McNair, a physicist and an accomplished musician, carried with him the quiet defiance of a boy raised in segregated South Carolina, the same boy who once refused to leave a whites-only library without his science books. Ellison Onizuka, an Air Force flight test engineer, was the first Asian American in space, and he wore that distinction lightly, letting his work speak for him. Gregory Jarvis, a payload specialist, had waited years for his chance to fly, living with delays and reshuffles that would have dulled anyone’s patience, but not his. Christa McAuliffe, a teacher and civilian, carried with her the idea that space belonged not just to astronauts, but to everyone, and the expectation that a classroom could be part of the mission too.

They were not reckless. They were not unaware. They trusted the system they worked within. That trust matters. Astronauts accept risk as part of their profession, but they do so on the assumption that known dangers will be surfaced, debated, and treated seriously. Challenger was not a case of fate overwhelming preparation. It was a case where human judgement failed to honour that trust.

Remembering the crew is not about sentimentality. It is about responsibility. When organisations talk about risk in abstract terms, they create distance from consequence. Challenger reminds us that every technical trade-off ultimately maps to human lives.

That is why the disaster still resonates. Not because rockets fail, but because institutions failed people who had every reason to believe they were being protected by the best decisions available.

The Failure That Wasn’t a Surprise

The immediate cause of the Challenger disaster was a failed O-ring seal in the right solid rocket booster. These rubber seals were meant to prevent superheated gases from escaping during launch. On that January morning, the cold made the O-rings stiff and slow to respond. Hot gas leaked through the joint, burned through structural supports, and ruptured the external fuel tank.

That explanation fits neatly into a diagram. It fits less neatly into reality.

The truth is, the risk was known. Engineers at Morton Thiokol, the contractor responsible for the solid rocket boosters, had raised concerns for months about O-ring erosion, particularly in colder conditions. On the night before launch, they warned plainly that the forecast temperatures could push the joint beyond its safe limits. During a late-night teleconference, they argued for a delay.

Thiokol’s management stepped away for an internal discussion and returned with a different recommendation, which NASA took as the green light to proceed.

This is the part of the story that still matters most. Challenger was not destroyed by ignorance. It was destroyed by a decision.

How Risk Became Routine

In the years leading up to 1986, the shuttle programme had become a victim of its own success. Flights that should have been exceptional became routine. Anomalies that should have triggered an alarm became data points to be managed.

O-ring erosion had been observed before. It had not caused a disaster. Each successful flight subtly redefined what was considered acceptable. What began as a serious concern gradually became a tolerable deviation.

This process has a name: normalisation of deviance. It is how organisations convince themselves that tomorrow will look like yesterday, even when the warning signs are right in front of them. Add schedule pressure, political scrutiny, and the symbolic weight of flying the first teacher into space, and the launch decision becomes easier to understand, if not to excuse. The system rewarded progress, not hesitation.

When engineers objected, their warnings were framed as caution rather than urgency. Their data was deemed incomplete, their arguments not decisive enough. Management wanted proof of failure, not evidence of risk.

That inversion, demanding certainty where none can exist, lies at the heart of many technological disasters.

After the Disaster

The shuttle fleet was grounded for nearly three years. NASA redesigned the booster joints, reworked its safety processes, and subjected itself to intense public and political scrutiny.

The Rogers Commission report was blunt. It concluded that NASA’s organisational culture had played as much of a role as any mechanical failure. Communication had failed. Management had overridden engineering judgement. Safety had been compromised by institutional pressure.

At the time, the lesson seemed clear. Fix the hardware, yes, but more importantly, fix the culture.

For a while, it appeared that the lesson had stuck.

Columbia and the Sound of an Echo

Seventeen years later, on 1 February 2003, Space Shuttle Columbia broke apart during re-entry, killing its seven-person crew.

The cause was different. A piece of foam insulation struck the shuttle’s wing during launch, damaging the heat shield. On re-entry, plasma tore through the compromised structure.

Once again, the deeper story was not about foam.

During the post-launch assessment, engineers had spotted the strike. They were worried. They asked for additional orbital imaging to assess the damage. Those requests were denied or deferred. Management judged the risk to be acceptable based on previous flights that had survived similar impacts.

The language had changed. The technology had changed. The pattern had not. Foam shedding had become routine. Damage that had not yet killed a crew was treated as survivable by default. The absence of disaster was mistaken for evidence of safety.

When the Columbia Accident Investigation Board published its findings, the parallels with Challenger were impossible to ignore. Once again, NASA was criticised not for technical incompetence, but for cultural blind spots. Once again, risk had been understood, discussed, and ultimately discounted.

The most unsettling conclusion was simple: NASA had not fully internalised the lesson it believed it had learned in 1986.

Why Learning From Failure Is So Hard

It is tempting to frame Challenger and Columbia as cautionary tales from a bygone era. Cold War hardware. Bureaucratic inertia. Lessons already absorbed by modern systems.

That temptation is dangerous.

Complex technological systems do not fail because people stop caring. They fail because humans are wired to underestimate rare risks, over-trust familiar systems, and defer to authority under pressure. No amount of improved materials science changes that.

Organisations, especially successful ones, struggle to maintain a sense of vulnerability. Each safe outcome reinforces confidence. Each anomaly that does not end in catastrophe quietly lowers the threshold for concern.

Formal lessons fade faster than institutional memory admits. People retire, teams rotate, and new pressures emerge. What remains are the habits.

Spaceflight Today Feels Different. It Isn’t.

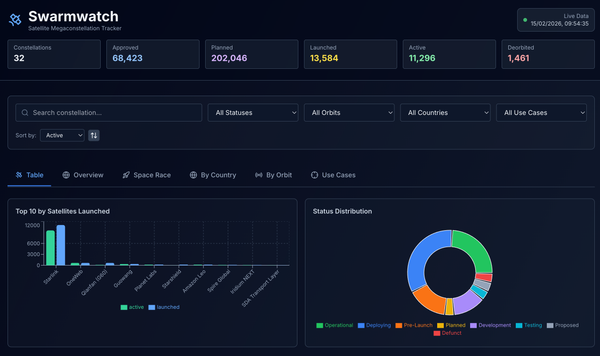

Today’s space industry looks nothing like NASA in the 1980s. Launch cadence is higher. Private companies dominate. Rockets land themselves. Failures are streamed live and dissected in real time.

The scale alone tells the story. By 2025, SpaceX was flying Falcon 9 at a pace of 165 missions in a single year, more than every other launch provider combined. China also maintained a remarkable pace, with 92 orbital launches. What was once exceptional is now routine. What once required years of preparation now happens weekly, sometimes daily.

Yet many of the underlying dynamics feel uncomfortably familiar.

Commercial operators pride themselves on rapid iteration and learning through failure. That philosophy has real strengths. It has driven down costs, accelerated innovation, and reshaped access to orbit. It also carries risk. When speed becomes identity, caution can start to look like obstruction. When iteration becomes routine, near misses can lose their emotional weight.

The difference between acceptable risk and institutionalised complacency is not always obvious in real time, especially when the scoreboard keeps flashing success.

Automation and AI promise better decision support, but they also introduce new failure modes. Humans may defer to systems they do not fully understand. Signals may be filtered, weighted, or normalised in ways that quietly obscure edge-case dangers. Responsibility can become diffused between human judgement and machine output, each assuming the other is watching closely.

None of this is an argument against progress. It is an argument against assuming that progress immunises us from old mistakes.

The Lesson Challenger Still Teaches

If Challenger teaches anything enduring, it is that safety is not a property of hardware. It is a property of behaviour.

It depends on whether dissent is welcomed or merely tolerated. On whether bad news travels faster than good news. On whether leaders ask uncomfortable questions when everything appears to be working.

It depends on resisting the quiet, seductive logic that says “it worked last time”.

Forty years on, the danger is not that we forget Challenger. The danger is that we remember it as history rather than as a pattern.

The launch pad was rimed with ice after that freezing night in January 1986, the coldest conditions ever seen for a shuttle launch. The cold itself was no secret. What was unusual was how many people noticed the risk and how few felt able to stop the countdown.

That remains the hardest lesson of all.